TL;DR: To assess learning outcomes effectively, align assessments with clear objectives, mix formative and summative methods, and connect results to business KPIs. Use an LMS for analytics (completion, mastery, retention, time-on-task), add practical simulations for skill proof, and apply Kirkpatrick’s model to evidence impact at reaction, learning, behavior, and results levels.

Why do many organizations struggle to assess learning outcomes with confidence?

If your teams complete mandatory courses but performance gaps persist, you’re not alone. Many HR and L&D leaders face three common blockers: misaligned assessments, overreliance on theoretical quizzes, and limited visibility beyond course completion. To assess learning outcomes accurately, you need a plan that links learning objectives to the right assessment methods and uses analytics to show knowledge retention and behavior change.

- Misaligned objectives → tests: Objectives say “perform,” assessments only ask “recall,” so results don’t predict performance.

- Knowledge vs. skill proof: Multiple-choice questions measure recall but not procedure, judgment, or practice.

- Data blind spots: Completion rates don’t reveal understanding, confidence, or on-the-job transfer. Without analytics, it’s hard to show ROI.

The good news: a modern LMS and a structured model can fix this. Start by clarifying outcomes, then select the right mix of learning assessment methods, from quick knowledge checks to realistic simulations, and track results with learning analytics.

What assessment methods best measure knowledge, skills, and retention?

Use a layered approach that blends formative and summative assessments. This helps you measure both immediate learning and long-term retention while ensuring evidence of competence, not just recall.

Formative vs summative assessment: When should you use each?

- Formative assessments (during learning): low-stakes checks that guide progress and feedback.

  - Examples: micro-quizzes, flashcards, interactive polls, reflection prompts, quick scenario questions.

  - Use for: identifying misconceptions early, keeping engagement high, adjusting paths.

- Summative assessments (end of learning): high-stakes proof of mastery.

  - Examples: final exam, capstone assignment, certification test, task simulation.

  - Use for: compliance sign-off, role-readiness, promotion/credentialing decisions.

Quizzes, assignments, and simulations: What’s the right mix?

- Quizzes: Efficient for knowledge checks and spaced retrieval. Use item types beyond single-answer MCQs (multi-select, hotspot, drag-and-drop) to reduce guesswork and better assess learning outcomes.

- Assignments: Evidence of understanding and synthesis. Rubrics clarify expectations and make scoring consistent across evaluators.

- Simulations: Best for practical validation. Branching scenarios, software sims, or role-play scoring verify decisions, procedures, and communication skills.

Practical vs theoretical assessment: How do you balance them?

- Theoretical (concepts): test definitions, policies, and frameworks. Quick to scale but limited for performance prediction.

- Practical (application): evaluate real tasks and decisions. Higher validity for roles with risk, compliance, or customer impact.

- Balanced strategy: 30–40% theory for foundational knowledge, 60–70% application for role transfer. Increase practical weighting for safety-critical roles.
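The weighting above can be turned into a single composite score. The following is a minimal sketch, not a prescribed scoring method: the function name `composite_score` and the default practical weight of 0.65 (the midpoint of the 60–70% range) are assumptions chosen for illustration.

```python
def composite_score(theory_pct: float, practical_pct: float,
                    practical_weight: float = 0.65) -> float:
    """Blend a theory score and a practical score (both 0-100) into one result.

    practical_weight is the share given to applied assessment; the rest
    goes to theory. 0.65 is an illustrative default, not a standard.
    """
    if not 0.0 <= practical_weight <= 1.0:
        raise ValueError("practical_weight must be between 0 and 1")
    theory_weight = 1.0 - practical_weight
    blended = theory_weight * theory_pct + practical_weight * practical_pct
    return round(blended, 1)


# A learner who aces the quiz but struggles in the simulation
print(composite_score(90, 70))                        # 77.0
# Same learner in a safety-critical role with heavier practical weighting
print(composite_score(90, 70, practical_weight=0.8))  # 74.0
```

Raising the practical weight lowers the score of a learner who is strong on recall but weaker on application, which is the intended effect for higher-risk roles.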

How do you apply Kirkpatrick’s model to measure training impact end-to-end?

Kirkpatrick’s evaluation model offers a simple, executive-ready way to connect assessments with outcomes:

- Reaction: Did learners find it relevant and engaging?

  - Measure: post-course survey, NPS, perceived confidence.

- Learning: Did knowledge/skills improve?

  - Measure: pre/post scores, rubric-scored assignments, simulation accuracy.

- Behavior: Are they applying skills on the job?

  - Measure: manager checklists, observation, system usage metrics (e.g., CRM adherence).

- Results: Did business KPIs move?

  - Measure: fewer incidents, faster onboarding time-to-productivity, higher CSAT, reduced error rates.

Tie each level to clear metrics in your LMS and business systems. For example, pair the pass rate on a summative simulation with the change in error tickets over the following 60 days to show that learning and results moved together.
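For the Learning level, pre/post scores are often summarized as a raw gain and a normalized gain (the share of available headroom the cohort actually gained). The sketch below assumes paired scores on a 0–100 scale exported from your LMS; the function names and sample data are hypothetical.

```python
from statistics import mean


def average_gain(pre_scores, post_scores):
    """Mean raw gain, in percentage points, across paired pre/post scores."""
    if len(pre_scores) != len(post_scores) or not pre_scores:
        raise ValueError("need equally sized, non-empty score lists")
    return mean(post - pre for pre, post in zip(pre_scores, post_scores))


def normalized_gain(pre_scores, post_scores, max_score=100.0):
    """Cohort gain divided by the room left to improve before training."""
    pre_avg, post_avg = mean(pre_scores), mean(post_scores)
    if pre_avg >= max_score:
        raise ValueError("no headroom: pre-test average is already at maximum")
    return (post_avg - pre_avg) / (max_score - pre_avg)


# Hypothetical cohort of three learners
pre = [50, 60, 70]
post = [80, 75, 85]
print(average_gain(pre, post))     # 20 percentage points
print(normalized_gain(pre, post))  # 0.5, i.e. half of the possible gain
```

Normalized gain makes cohorts with different starting points easier to compare, since a group that starts at 80% has less room to improve than one that starts at 50%.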

Which LMS analytics matter most to measure progress and retention?

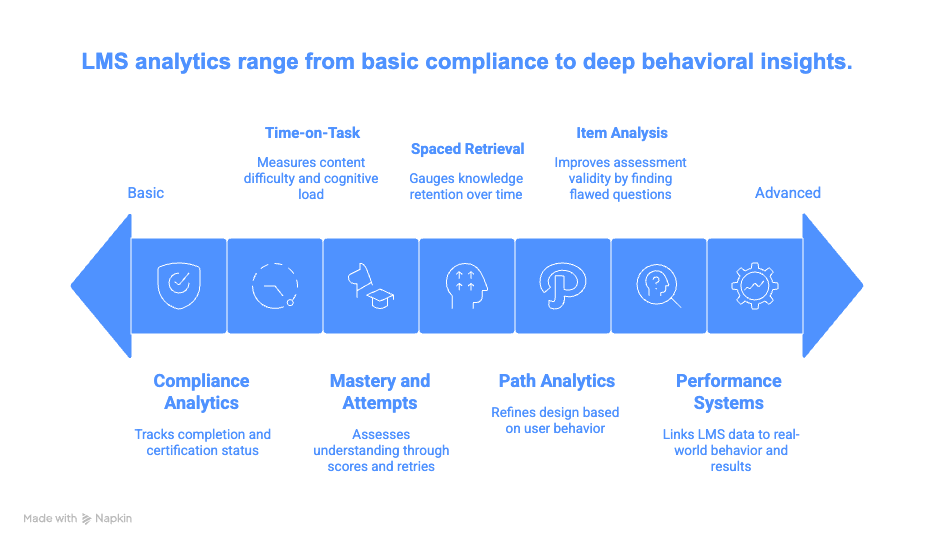

To move beyond completion, instrument your LMS assessments with data that explains progress and predicts retention.

- Mastery and attempts: first-pass vs. best-pass scores, number of attempts per item.

- Time-on-task: flags content that may be too easy or too hard; unusually long times can signal high cognitive load.

- Item analysis: discrimination index and difficulty to find flawed questions and improve validity.

- Spaced retrieval performance: correctness over time, to gauge knowledge retention.

- Path analytics: drop-off points, content replays, hint usage to refine instructional design.

- Compliance analytics: due/overdue, auto-reminders, audit trails, certificate status.
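Item analysis is the most calculation-heavy metric in this list, so here is a minimal sketch of how difficulty and a discrimination index can be computed. It assumes responses are exported as one row per learner with 1 for correct and 0 for incorrect, and it uses the common upper/lower 27% group comparison; your LMS may use a different formula (for example, point-biserial correlation). The function name and sample data are hypothetical.

```python
def item_analysis(responses, group_fraction=0.27):
    """Return difficulty and discrimination for each quiz item.

    responses: list of rows, one per learner, each a list of 0/1 per item.
    difficulty: share of all learners who answered the item correctly.
    discrimination: correct share in the top-scoring group minus the
    correct share in the bottom-scoring group (ranges from -1 to 1).
    """
    n_learners = len(responses)
    n_items = len(responses[0])
    group_size = max(1, round(n_learners * group_fraction))
    ranked = sorted(responses, key=sum, reverse=True)  # best total first
    upper, lower = ranked[:group_size], ranked[-group_size:]

    results = []
    for i in range(n_items):
        difficulty = sum(row[i] for row in responses) / n_learners
        upper_correct = sum(row[i] for row in upper)
        lower_correct = sum(row[i] for row in lower)
        discrimination = (upper_correct - lower_correct) / group_size
        results.append({
            "item": i,
            "difficulty": round(difficulty, 2),
            "discrimination": round(discrimination, 2),
        })
    return results


# Hypothetical results: six learners, four items
responses = [
    [1, 1, 1, 0],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [1, 0, 0, 0],
    [0, 0, 0, 1],
]
for row in item_analysis(responses):
    flag = "  <- review" if row["discrimination"] < 0 else ""
    print(row, flag)
```

In this sample, the last item has a discrimination of -0.5: learners with the lowest totals answered it correctly more often than the strongest learners, which usually points to a mis-keyed or ambiguous question.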

For deeper insights, link LMS data with performance systems (quality, safety, sales) to quantify behavior and results. For practical reporting examples, see "LMS reporting and analytics trends"; for product reporting capabilities, see "Smart Arena LMS reports".

How do you design an assessment plan that scales across regions and roles?

Use this five-step blueprint to standardize assessment quality across multiple sites and languages:

- Define outcomes as behaviors: write objectives with a measurable verb, context, and performance standard (e.g., “Complete a PPE check in under 2 minutes with zero misses”).

- Map methods to outcomes: recall → quiz; judgment → scenario; procedure → simulation/observation; compliance → exam + attestation.

- Build rubrics and thresholds: define pass criteria, critical errors, and partial credit; ensure inter-rater reliability.

- Localize, don’t just translate: adapt examples, regulations, and UI terms; validate item difficulty post-translation.

- Close the loop: review item analysis monthly, retire poor questions, and refresh content using analytics signals.
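The rubric step above calls for inter-rater reliability. One common way to check it is Cohen's kappa, which compares how often two evaluators agree with how often they would agree by chance. The sketch below is an illustration under that assumption; the function name and the sample ratings are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters scoring the same submissions."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need equally sized, non-empty rating lists")
    n = len(rater_a)
    # Share of submissions where both raters gave the same label
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's label frequencies
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


# Two regional evaluators scoring the same eight simulation attempts
site_a = ["pass", "pass", "fail", "pass", "fail", "fail", "pass", "pass"]
site_b = ["pass", "pass", "fail", "fail", "fail", "fail", "pass", "pass"]
print(cohens_kappa(site_a, site_b))  # 0.75
```

A kappa near 1 means the rubric is being applied consistently; a low value suggests the criteria need tightening or the evaluators need calibration before scores are compared across sites.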

Common mistakes in learning assessment: What should you avoid?

- Testing trivia instead of job-critical decisions.

- One-and-done exams without spaced reinforcement.

- Rubrics that are subjective or not shared with learners.

- Ignoring accessibility and multilingual equity.

- No linkage to KPIs, so success is invisible to executives.

How does Smart Arena help you assess learning outcomes at scale?

Smart Arena combines an enterprise-grade LMS with AI-powered course creation to help HR and L&D teams assess learning outcomes accurately and prove impact—without adding admin burden.

- Assessment variety: build quizzes, assignments, and branching simulations aligned to competencies.

- AI-assisted item generation: draft questions from your policies or SOPs, then refine with difficulty and discrimination checks.

- Learning analytics: dashboards for mastery, retention, item analysis, and compliance—with exportable, audit-ready trails.

- Automation: reminder cadences, recertification rules, and supervisor sign-offs.

- Multilingual support: deliver localized assessments with role-based access and mobile delivery for frontline teams.

- Integrations: connect HRIS and business systems to correlate learning with performance.

Which resources should you read next to deepen your analytics strategy?

- Trends in LMS reporting and analytics (awareness): frameworks, metrics, and dashboards to measure what matters.

- Smart Arena LMS reporting features (consideration): see compliance reports, mastery tracking, and item analysis in action.

What is the most reliable way to assess learning outcomes for compliance training?

Combine a proctored summative exam with role-specific simulations and manager attestations. Use LMS analytics for item analysis and auto-recertification to ensure sustained compliance.

How do I measure knowledge retention after a course ends?

Schedule spaced micro-quizzes at 2, 7, and 30 days, track correctness trends, and refresh items learners miss. Pair this with short scenario refreshers to reinforce application.
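As a rough sketch of that schedule, the snippet below computes follow-up quiz dates from a completion date and summarizes correctness at each interval. The function names, the sample date, and the result counts are hypothetical; in practice, the scheduling and scoring would typically be handled by your LMS automation rules.

```python
from datetime import date, timedelta


def retention_schedule(completed_on, offsets_days=(2, 7, 30)):
    """Dates on which to send spaced micro-quizzes after course completion."""
    return [completed_on + timedelta(days=offset) for offset in offsets_days]


def correctness_trend(results):
    """Map each interval (in days) to the share of items answered correctly.

    results: {offset_days: (correct_answers, total_answers)}
    """
    return {offset: correct / total for offset, (correct, total) in results.items()}


# Learner finished the course on 3 March 2025
for quiz_date in retention_schedule(date(2025, 3, 3)):
    print(quiz_date.isoformat())  # 2025-03-05, 2025-03-10, 2025-04-02

# Hypothetical cohort results at each interval
print(correctness_trend({2: (18, 20), 7: (15, 20), 30: (12, 20)}))
# {2: 0.9, 7: 0.75, 30: 0.6}
```

A steady decline like the one in the sample data is the signal to refresh the missed items or add a short scenario refresher before the next interval.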

When should I use formative vs summative assessment in corporate training?

Use formative assessments during learning to give feedback and correct misconceptions; use summative at the end to certify mastery and readiness for on-the-job tasks.

How can LMS analytics prove training impact to executives?

Map assessment results to business KPIs (incidents, time-to-productivity, CSAT). Show pre/post deltas, behavior observations, and trend lines in dashboards and exportable reports.

What’s the best way to balance practical vs theoretical assessment?

Weight 60–70% toward application in roles where errors are risky. Use quizzes for foundational knowledge, then validate performance with branching scenarios and observed tasks.

What should you implement this quarter?

- Write behavior-based outcomes; align each with the right assessment method.

- Adopt mixed methods: quizzes for recall, assignments for synthesis, simulations for skills.

- Instrument analytics: mastery, item analysis, spaced retrieval, and KPI linkage.

- Localize assessments and validate difficulty post-translation.

- Use Kirkpatrick to communicate impact beyond completion rates.

To assess learning outcomes, align behavior-based objectives to methods (quiz for recall, assignments for synthesis, simulations for skills), blend formative and summative assessments, and track mastery, item analysis, and spaced retrieval in your LMS. Apply Kirkpatrick to connect learning with behavior and results. Localize assessments and validate difficulty across languages. Link assessment data to KPIs (incidents, time-to-productivity) to prove impact.

Mini comparison table: Assessment methods

| Method | Best for | Pros | Watch-outs |
|---|---|---|---|
| Quizzes | Recall, terminology | Fast, scalable, good for spaced practice | May not predict performance |
| Assignments | Synthesis, judgment | Rich evidence, rubric-friendly | Scoring time and consistency |
| Simulations | Skills, decisions | High validity, safe practice | Setup complexity; needs clear rubrics |